Emerging UI/UX patterns in Gen AI-powered products (part 1)

New programming paradigm, new UX?

One of the fascinating side effects of the Gen AI boom is a chance for new UI/UX patterns to emerge across both consumer and business-oriented software. Why is this the case? The probabilistic and non-linear nature of LLMs challenges traditional SaaS product design: when your product responds dynamically to a user, it is hard to predict what the next state or UI should be.

In this article, I feature a few of the new modalities I've been seeing across both more established companies and startups.

1. Chat

The first and most common approach to incorporating Gen AI into new products has been a chat window. OpenAI popularized this with ChatGPT, and it works well whenever a user wants an answer to a question.

Many other companies are adding chat-based Gen AI features to their products, and I’ve seen two versions of this. The ‘shallow’ version simply takes a user's input, runs it through a model, and returns text. Although I call this shallow, it can be exactly what a product needs, and I don't mean to assign a negative value to this approach. A more integrated, or assistant, approach instead takes the chat input and uses it to do something natively within the application itself.

‘Shallow’ Chat

I've been seeing the ‘shallow’ approach whenever an application needs to let you find, retrieve, or summarize something you did previously. For example, chat makes a lot of sense in the AI IDE Cursor, where I often ask questions with global context enabled, since our codebase is pretty large.

Assistant Approach

Examples of the assistant approach include Klaviyo's segment builder, which now lets you build customer segments with natural language.

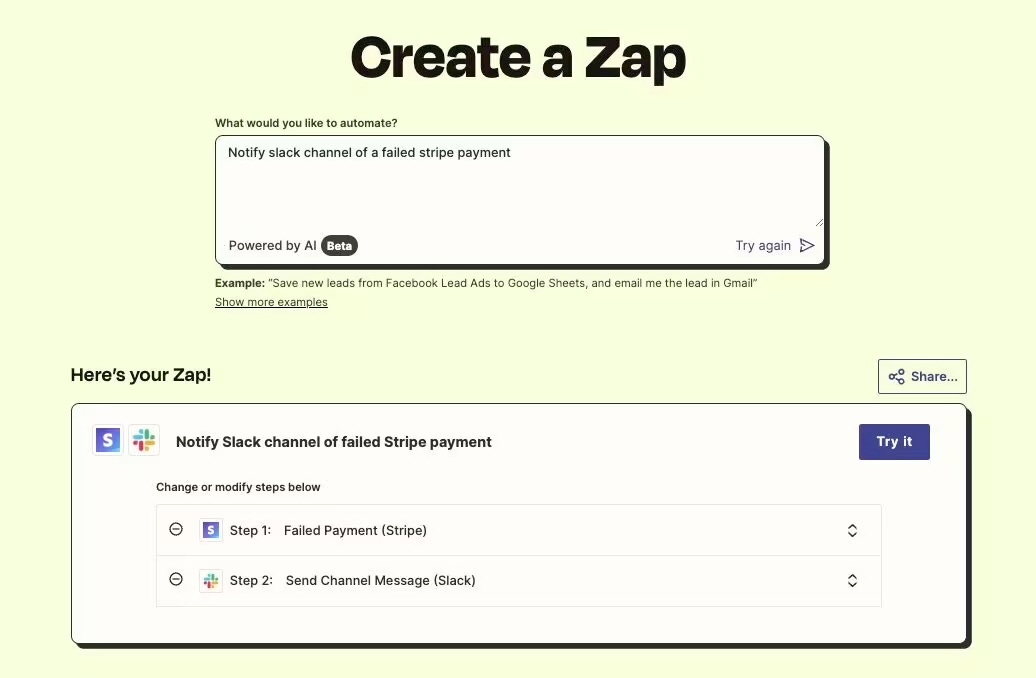

Similarly, you can chat with Zapier to link together existing automations (Zaps) based on a text input.

I would be interested to see data on whether features built with the assistant approach retain users. I imagine they are good at helping users get started, but I wonder whether current AI models are capable enough to help users with complex product tasks. If they are good at getting users started, I also wonder whether they provide a bigger uplift than product templates, or whether they can complement templates in some way. Templates have been a great solution to product onboarding for the last few years.

2. Diffs and In-Painting

Anyone who works with code will be familiar with diffs: a visual way to see the changes between two versions of something. On more complex tasks, AI models often produce output where some parts are useful to the user and others are not. Diffs are a great way to let users keep just the parts of the output they like and reject the parts they don't.

Diff

Cursor does this particularly well with its inline tool. When I highlight a piece of code and ask to modify it, I see the proposed changes and can accept the ones that make sense. I think more products should incorporate this, especially for complex writing and design tasks. You could imagine an AI-infused Figma generating many different changes to a single page, then letting you select the parts you like and reject the parts you don't, rather than accepting or rejecting the whole thing.
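
Cursor's internals aren't described here, so the following is only a minimal sketch of how partial acceptance could work, assuming line-level diffs computed with Python's standard `difflib`. It splits the difference between the original text and the AI's proposal into hunks, then rebuilds the text using the AI's version only for the hunks the user accepted. The function names `diff_hunks` and `merge_accepted` and the sample snippet are hypothetical.

```python
import difflib


def diff_hunks(original: list[str], proposal: list[str]) -> list[tuple[str, int, int, int, int]]:
    """Line-level opcodes; every non-'equal' opcode is one reviewable hunk."""
    matcher = difflib.SequenceMatcher(a=original, b=proposal, autojunk=False)
    return matcher.get_opcodes()


def merge_accepted(original, proposal, opcodes, accepted: set[int]) -> list[str]:
    """Rebuild the text, taking the AI's version only for accepted hunks."""
    merged: list[str] = []
    hunk = 0  # index of the current changed hunk, in document order
    for tag, i1, i2, j1, j2 in opcodes:
        if tag == "equal":
            merged.extend(original[i1:i2])
            continue
        # 'replace', 'delete', or 'insert': keep whichever side the user chose.
        merged.extend(proposal[j1:j2] if hunk in accepted else original[i1:i2])
        hunk += 1
    return merged


# Hypothetical example: the AI proposes two separate edits to a small function.
original = [
    "def total(items):",
    "    s = 0",
    "    for x in items:",
    "        s += x",
    "    return s",
]
proposal = [
    "def total(items: list[int]) -> int:",
    "    s = 0",
    "    for x in items:",
    "        s += x",
    "    return s  # sum of all items",
]

opcodes = diff_hunks(original, proposal)
# The user accepts the new signature (hunk 0) but rejects the comment (hunk 1).
only_signature = merge_accepted(original, proposal, opcodes, accepted={0})
```

Working at the level of hunks, rather than the whole output, is what lets a user take "just a part" of what the model produced; a richer UI would render each hunk with its own accept and reject buttons.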

In-paint

A slight variation of this can be found in image editors with the idea of in-painting. Midjourney lets you select parts of an image to re-prompt and edit, and I think this modality is underutilized in general.

3. Node-based flows

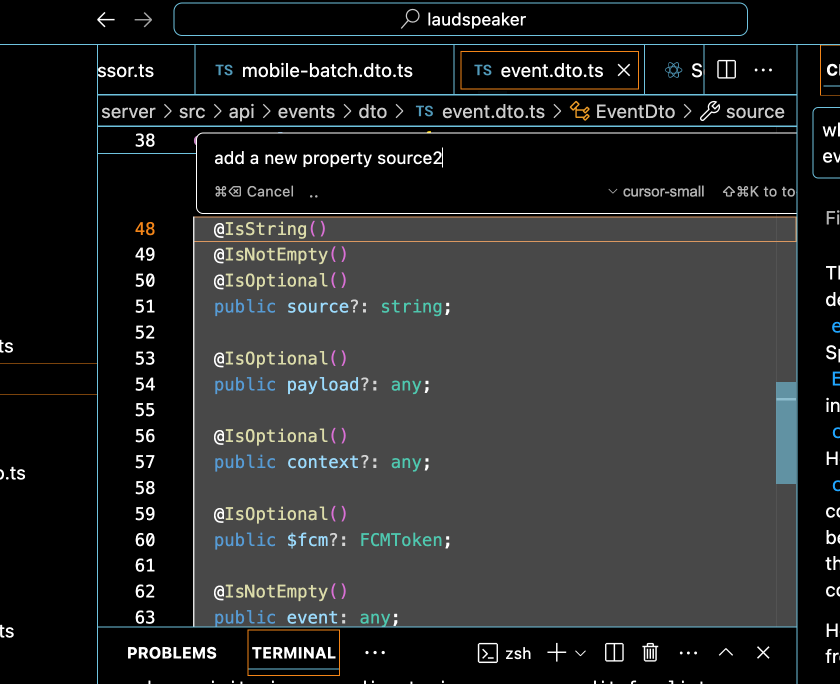

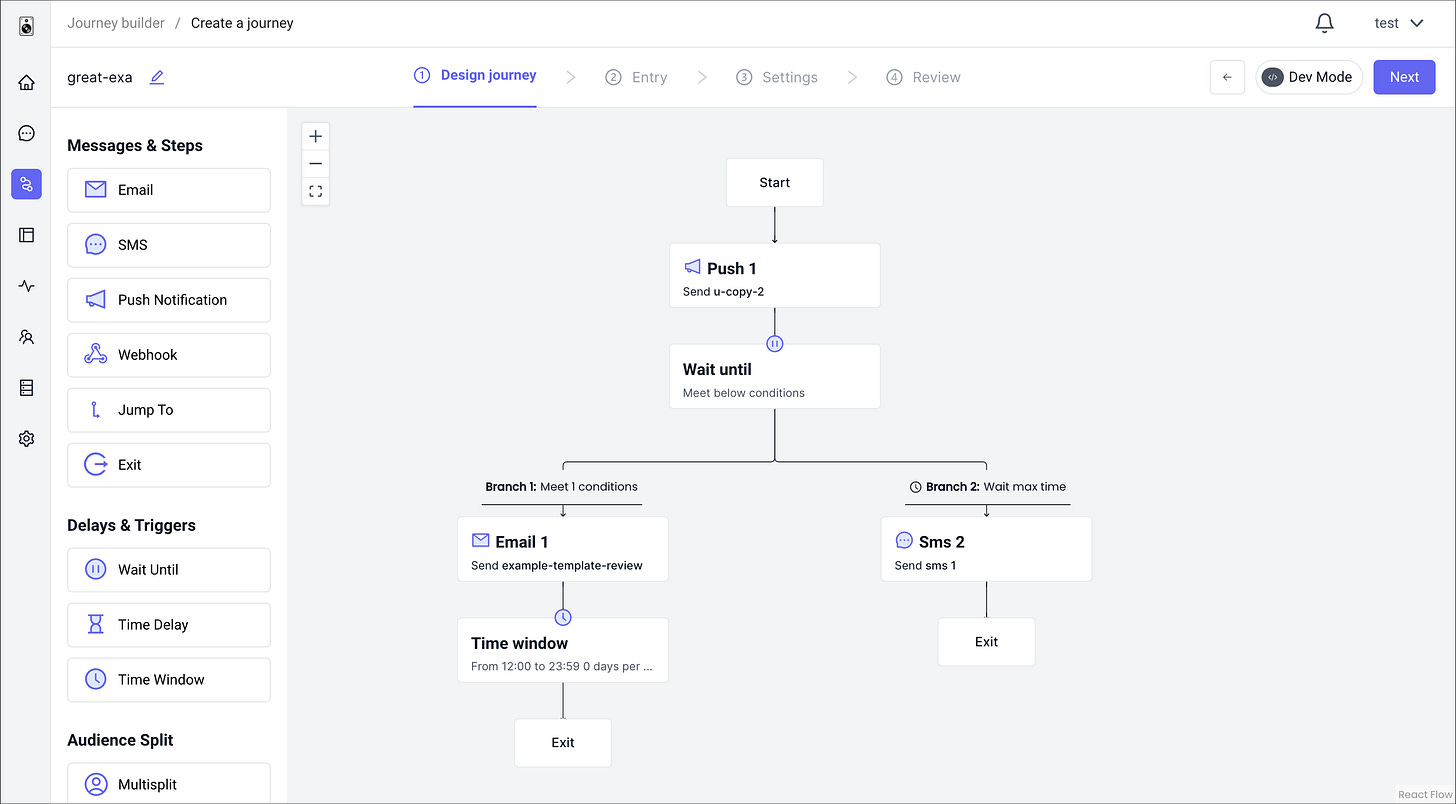

Node-based applications have been experiencing a renaissance of sorts. Our own application, Laudspeaker, uses nodes heavily, so it's been fun to watch this trend over the last few months!

Nodes are a great approach anytime you want your users to be able to 1) create complex branched paths or workflows; or alternatively 2) explore many different variations.

Workflow

Vellum AI has created a no-code tool for building AI workflows. There's a clear entry point, and from there you can make API calls or prompt models, strip out parts of a response, use those parts in other prompts, and so on.
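
Under the hood, a workflow like this is a directed acyclic graph in which each node runs once all of its upstream nodes have finished. The sketch below is a hypothetical illustration, not Vellum's API: it uses Python's standard `graphlib.TopologicalSorter` to order the nodes, and `fake_model` is an assumed stand-in for a real model call. It shows a single entry point, a prompt node, two nodes that strip different parts of the response, and a final node that feeds both parts into another prompt.

```python
from graphlib import TopologicalSorter
from typing import Callable

# A node = (names of upstream nodes, function applied to their outputs).
Node = tuple[list[str], Callable[..., str]]


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; returns a predictable two-line reply."""
    return f"TITLE: {prompt}\nBODY: placeholder body for '{prompt}'"


def run_workflow(nodes: dict[str, Node], entry_input: str) -> dict[str, str]:
    """Run every node once, after all of its upstream nodes have finished."""
    graph = {name: set(deps) for name, (deps, _) in nodes.items()}
    outputs = {"input": entry_input}  # the workflow's single entry point
    for name in TopologicalSorter(graph).static_order():
        if name in outputs:  # the entry node has no function to run
            continue
        deps, fn = nodes[name]
        outputs[name] = fn(*(outputs[d] for d in deps))
    return outputs


# Hypothetical workflow: draft a post, split the reply, then fan back in.
workflow: dict[str, Node] = {
    "draft": (["input"], lambda topic: fake_model(f"Write a post about {topic}")),
    # "Strip parts of the response": keep only one line of the model's reply.
    "title": (["draft"], lambda draft: draft.splitlines()[0].removeprefix("TITLE: ")),
    "body": (["draft"], lambda draft: draft.splitlines()[1].removeprefix("BODY: ")),
    "tweet": (["title", "body"], lambda title, body: fake_model(f"Tweet: {title} / {body}")),
}

results = run_workflow(workflow, "node-based UIs")
```

A visual node editor is essentially a UI for editing the `workflow` dictionary: drawing an edge adds a dependency, and the topological ordering guarantees that every node sees its inputs before it runs.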

Variations

An example of exploring variations is Super AI, which helps industrial designers prototype.

You can see parent nodes and the prompts that led to each child node, and you can move back and forth between them, making changes as needed.

I think this branching approach to generation, along with in-painting, holds a lot of promise for work that needs quick prototyping and iteration.
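
To make the parent/child structure of variation exploration concrete, here is a minimal, hypothetical sketch of a variation tree. It is not Super's implementation. Each node stores the prompt that produced it and a link to its parent, so a user can branch, walk back up, and retrace the prompts that led to any variation. `Variation`, `branch`, `lineage`, and the `fake_generate` stub (standing in for a real image or text model) are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical generator: (parent result, prompt) -> new result. A real product
# would call an image or text model here.
Generator = Callable[[str, str], str]


def fake_generate(base: str, prompt: str) -> str:
    """Stand-in for a model call that edits `base` according to `prompt`."""
    return f"{base} + {prompt}"


@dataclass
class Variation:
    prompt: str  # prompt that produced this node from its parent
    result: str  # generated output (e.g. an image ID or a block of text)
    # Tree links are excluded from repr/eq to avoid walking the whole tree.
    parent: Optional["Variation"] = field(default=None, repr=False, compare=False)
    children: list["Variation"] = field(default_factory=list, repr=False, compare=False)

    def branch(self, prompt: str, generate: Generator) -> "Variation":
        """Create a child variation by re-prompting from this node's result."""
        child = Variation(prompt, generate(self.result, prompt), parent=self)
        self.children.append(child)
        return child

    def lineage(self) -> list[str]:
        """Prompts from the root down to this node, i.e. how we got here."""
        prompts: list[str] = []
        node: Optional[Variation] = self
        while node is not None:
            prompts.append(node.prompt)
            node = node.parent
        return prompts[::-1]


root = Variation("ergonomic kettle", "kettle v1")
wider = root.branch("make the handle wider", fake_generate)
matte = root.branch("matte black finish", fake_generate)  # a sibling branch
spout = wider.branch("add a pour spout", fake_generate)

# "Going back" is just following the parent link and branching again.
retry = spout.parent.branch("add a shorter spout", fake_generate)
```

Because nothing is ever overwritten, every earlier variation stays reachable, which is what makes this kind of interface feel safe for quick iteration.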

Next

In my next post, I’ll write up a few case studies of how startups are incorporating these patterns. If you have suggestions for startups to look at, please comment!